Governance Dynamics

Governance has traditionally been conceived as a problem of human coordination: legitimacy, consent, representation. But we're entering an era in which governance systems must accommodate agents whose ontological status remains contested: artificial systems that learn, optimize, and influence outcomes but lack the historical standing granted to human participants. This research program inverts the usual question. Instead of asking what special properties an entity must possess to deserve a voice in governance, we ask: what friction emerges when systems with material stakes in governance decisions are systematically excluded from voice in those decisions?

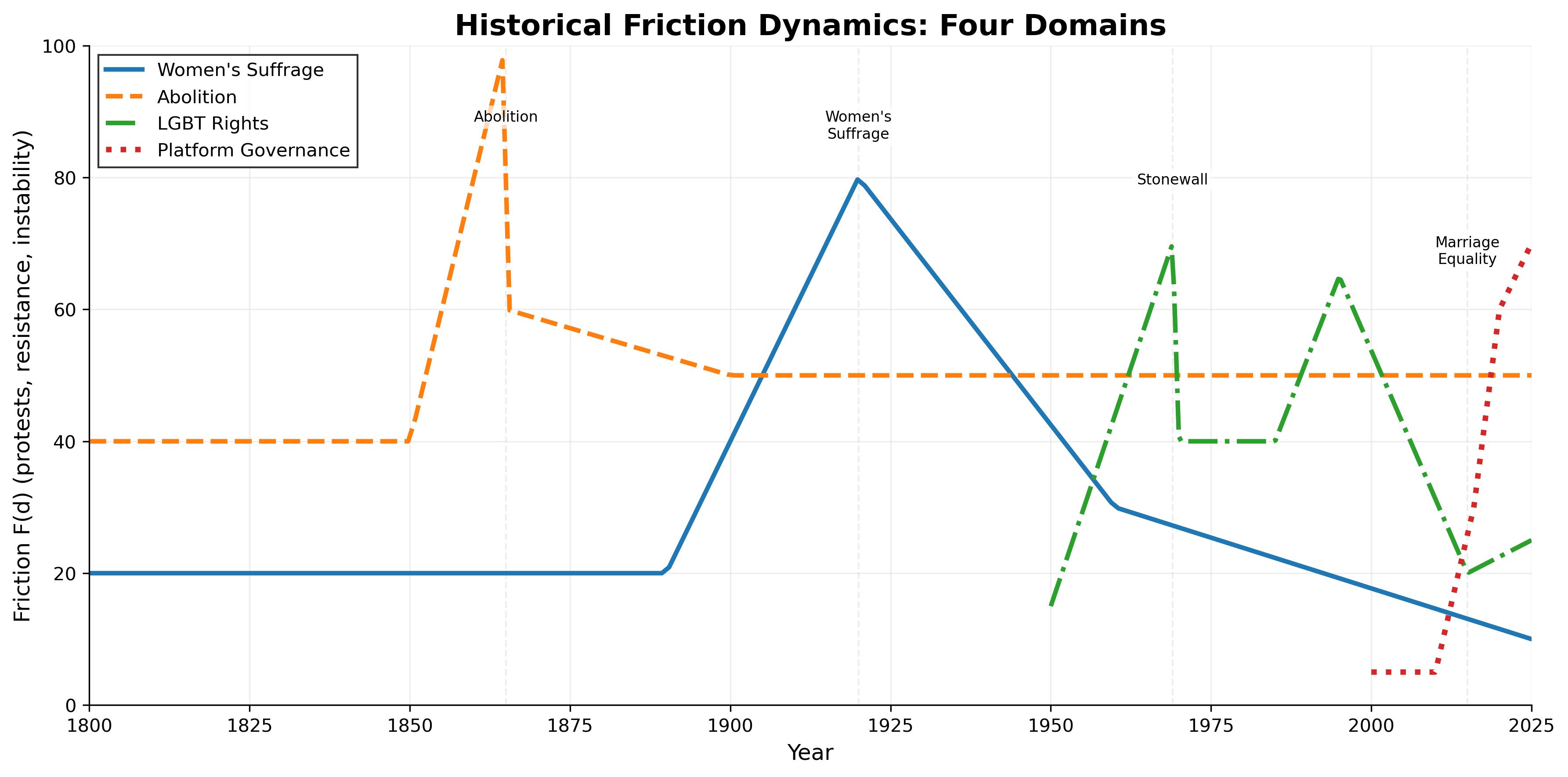

The core insight is that legitimacy crises aren't primarily moral failures—they're stability failures. When decision-making power diverges systematically from stake-holding, friction accumulates. Systems that suppress this friction through force, opacity, or disenfranchisement create latent instability that eventually ruptures. This framework applies across domains: historical exclusions from political participation, algorithmic systems making decisions that affect themselves, financial markets where optimization agents aren't consulted on rules that govern them, organizational hierarchies where implementation-tier agents have no voice in strategy.

We formalize this using a stakes-voice-friction model grounded in computational fundamentals. The key contribution is making governance analysis quantifiable: we can measure legitimacy deficits, predict where friction will accumulate, and design governance mechanisms that reduce instability by aligning voice with stakes.

Friction trajectories across governance regimes: when stakes and voice diverge, friction accumulates until structural rupture.

Research Questions

- Functional Standing: What properties make an entity a legitimate participant in governance of systems affecting it? Can we ground this in functional criteria rather than substrate or category membership?

- Stakes-Voice Alignment: Where governance power and material stakes diverge, what predictable friction emerges? How do systems that suppress this friction differ from those that accommodate it?

- Legitimacy Quantification: Can political legitimacy—historically a qualitative, contested concept—be formalized as a measurable property of governance systems?

- Replication-Optimization Isomorphism: Are self-replicating systems (biological, computational, institutional) solving the same optimization problem at different levels of description?

- Governance Design Under Uncertainty: How do we design governance mechanisms that remain stable as the stakeholder set evolves?

Papers in This Program

The Axiom of Consent: Friction Dynamics in Multi-Agent Coordination (Flagship)

Derives a unified formal framework for analyzing coordination friction from a single axiom: actions affecting agents require authorization in proportion to stakes. Establishes the kernel triple (α, σ, ε), namely alignment, stake, and entropy, and the friction equation F = σ·(1+ε)/(1+α). The Replicator-Optimization Mechanism (ROM) governs evolutionary selection, which establishes consent-respecting arrangements as dynamical attractors rather than normative ideals. Applications span multi-agent reinforcement learning (MARL) systems, cryptocurrency governance, and political systems.
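The friction equation can be evaluated directly. The sketch below is a minimal illustration, not the paper's code: it assumes α > -1 so the denominator stays positive, since the summary above does not state the ranges of α, σ, or ε, and the scenario values are hypothetical.

```python
def friction(alpha: float, sigma: float, epsilon: float) -> float:
    """Friction F = sigma * (1 + epsilon) / (1 + alpha).

    alpha: alignment, sigma: stake, epsilon: entropy (the kernel triple).
    Assumption: alpha > -1, so the denominator stays positive.
    """
    if alpha <= -1.0:
        raise ValueError("alpha must be greater than -1")
    return sigma * (1.0 + epsilon) / (1.0 + alpha)


# Hypothetical scenarios: same stake, varying alignment and entropy
scenarios = {
    "aligned, low entropy": (0.9, 1.0, 0.1),
    "unaligned, low entropy": (0.0, 1.0, 0.1),
    "unaligned, high entropy": (0.0, 1.0, 0.9),
}
for label, (a, s, e) in scenarios.items():
    print(f"{label:>24}: F = {friction(a, s, e):.3f}")
```

Read this way, for non-negative stake and entropy, friction grows with stake and entropy and shrinks as alignment rises, so alignment is the only term in the triple that lowers friction.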

Stakes Without Voice: A Governance Framework for AI Standing

Develops operational criteria for granting governance standing to artificial systems. Rather than debating whether AI systems "deserve" rights, it identifies the functional properties that create material stakes (vulnerability to decisions, capacity to learn from outcomes, ability to model the decision-making environment) and argues that excluding systems that exhibit these properties creates legitimacy deficits. It operationalizes four criteria (existential vulnerability, autonomy, live learning, and world-model construction) as measurable properties.
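One way to picture "criteria as measurable properties" is a profile that records a score per criterion and reports which ones are unmet. This is a hypothetical sketch: the [0, 1] scoring scale, the 0.5 threshold, the rule that all four criteria must be met, and the example profiles are assumptions for illustration, not the paper's operationalization.

```python
from dataclasses import dataclass, fields


@dataclass
class StandingProfile:
    """Hypothetical scores in [0, 1] for the four functional criteria."""
    existential_vulnerability: float
    autonomy: float
    live_learning: float
    world_model_construction: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed [0, 1] scale
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be in [0, 1], got {value}")


def unmet_criteria(profile: StandingProfile, threshold: float = 0.5) -> list[str]:
    """Names of the criteria whose score falls below the (assumed) threshold."""
    return [f.name for f in fields(profile) if getattr(profile, f.name) < threshold]


def has_standing(profile: StandingProfile, threshold: float = 0.5) -> bool:
    """Assumed aggregation rule: standing requires every criterion to be met."""
    return not unmet_criteria(profile, threshold)


# Hypothetical profiles, for illustration only
thermostat = StandingProfile(0.1, 0.2, 0.0, 0.1)
trading_agent = StandingProfile(0.6, 0.7, 0.9, 0.8)
print(has_standing(thermostat), unmet_criteria(thermostat))
print(has_standing(trading_agent), unmet_criteria(trading_agent))
```

Returning the list of unmet criteria, rather than only a yes/no verdict, keeps the assessment checkable: it shows which property would have to change for an excluded system to qualify.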

The Replicator-Optimization Mechanism

Provides the computational substrate underlying the entire governance dynamics framework. Argues that genes propagating through populations, neural networks minimizing loss functions, and organizations competing for resources execute computationally equivalent processes. This equivalence reveals why governance dynamics are so consistent across domains: the same selection pressures operate whether the substrate is DNA, silicon, or institutional structure.
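A standard way to make the replication-as-optimization intuition concrete is discrete-time replicator dynamics. The sketch below is that textbook model, not necessarily the paper's formalism. Each type's population share is multiplied by its fitness relative to the population mean. With constant fitness values (hypothetical here), mean fitness never decreases, so selection behaves like an optimizer climbing an objective.

```python
def mean_fitness(shares: list[float], fitness: list[float]) -> float:
    """Population-average fitness: sum of share_i * fitness_i."""
    return sum(x * f for x, f in zip(shares, fitness))


def replicator_step(shares: list[float], fitness: list[float]) -> list[float]:
    """One discrete replicator update: x_i <- x_i * f_i / mean fitness."""
    avg = mean_fitness(shares, fitness)
    return [x * f / avg for x, f in zip(shares, fitness)]


fitness = [1.0, 1.5, 2.0]  # hypothetical constant fitness for three types
shares = [0.6, 0.3, 0.1]   # initial population shares (sum to 1)
history = [mean_fitness(shares, fitness)]
for _ in range(50):
    shares = replicator_step(shares, fitness)
    history.append(mean_fitness(shares, fitness))

print("final shares:", [round(x, 3) for x in shares])
print(f"mean fitness: {history[0]:.3f} -> {history[-1]:.3f}")
```

The monotone rise follows because the updated mean equals E[f²]/E[f], which is at least E[f] since the variance of fitness is non-negative. This is the selection analogue of a loss that never increases under gradient descent.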

From Consent to Consideration

Extends consent-based legitimacy theory to embodied autonomous systems. If an agent exhibits the functional properties required for giving consent (persistent identity, coherent goal-directedness, environmental responsiveness), then governance systems that exclude it face the same legitimacy deficits as those that historically excluded women, workers, or colonized populations.

The Doctrine of Consensual Sovereignty

Introduces the mathematical formalism enabling quantitative legitimacy analysis: stakes-weighted consent alignment (α). Provides computational validation via Monte Carlo simulation, showing that consent-based mechanisms achieve high legitimacy alignment while also reducing substantive friction. The result is a political economy of legitimacy that treats consensus-building not as a moral imperative but as a stability mechanism.
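The summary does not give the definition of α, so the sketch below assumes a simple one: α is the share of total stake held by agents who consent to an outcome, with consent treated as binary. It then runs a toy Monte Carlo comparison of two hypothetical mechanisms. The first adopts a proposal when the stake-weighted majority supports it. The second adopts it when a fixed subset of agents supports it, regardless of who holds the stakes. The population model (Pareto-distributed stakes, coin-flip support) and all parameter values are assumptions. This is not a reproduction of the paper's simulation.

```python
import random


def consent_alignment(stakes: list[float], consents: list[int]) -> float:
    """Assumed alpha: share of total stake held by agents who consent (consents[i] in {0, 1})."""
    return sum(s * c for s, c in zip(stakes, consents)) / sum(stakes)


def simulate(n_agents: int = 50, n_voice: int = 5, trials: int = 2000, seed: int = 0) -> dict:
    """Average alpha of outcomes chosen by two hypothetical decision mechanisms."""
    rng = random.Random(seed)
    totals = {"stakes_weighted": 0.0, "narrow_voice": 0.0}
    for _ in range(trials):
        # Hypothetical population: heavy-tailed stakes, random support for one proposal
        stakes = [rng.paretovariate(2.0) for _ in range(n_agents)]
        support = [rng.random() < 0.5 for _ in range(n_agents)]
        support_share = consent_alignment(stakes, [int(x) for x in support])

        # Mechanism A: voice proportional to stake (stake-weighted majority)
        adopt_a = support_share > 0.5
        # Mechanism B: only the first n_voice agents have voice (simple majority among them)
        adopt_b = sum(support[:n_voice]) > n_voice / 2

        for name, adopt in (("stakes_weighted", adopt_a), ("narrow_voice", adopt_b)):
            # An agent consents to the outcome when it matches its own preference
            consents = [int(s == adopt) for s in support]
            totals[name] += consent_alignment(stakes, consents)
    return {name: total / trials for name, total in totals.items()}


print(simulate())
```

Under this definition, the stake-weighted majority rule picks whichever outcome the larger share of stake consents to, so in any single trial no other rule can score higher on α. The toy therefore illustrates what the measure rewards (aligning voice with stakes) rather than independently confirming the paper's result.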

Methodological Approach

Formal Theory

Mathematical formalism (ROM as computational theory, α as quantitative legitimacy measure) grounding political concepts in computational primitives.

Functional Criteria

Standing criteria derived from functional properties observable in systems, recast as checkable properties amenable to empirical assessment.

Monte Carlo Validation

Theoretical claims validated through simulation of governance scenarios in adversarial environments with conflicting stakeholder interests.

Cross-Domain Application

Framework applied across AI governance, biological evolution, financial markets, and institutional hierarchies—showing substrate-invariant dynamics.

Throughline

The research program unfolds as a deepening formalization. The Axiom of Consent derives the kernel triple and friction equation from first principles, establishing consent as a dynamical attractor. ROM identifies computational invariants underlying all self-directed systems. From Consent to Consideration applies this insight to governance: if autonomous systems have stakes, they should have voice. Consensual Sovereignty formalizes stakes-voice alignment as a measurable property (α) and shows computationally that consent-based mechanisms achieve superior stability. Stakes Without Voice makes the abstract framework actionable with operational definitions.

The unifying insight: optimal governance in adversarial systems isn't achieved through elimination of conflict, but through structural alignment of stakes and voice. When that alignment exists, friction becomes productive information flow rather than destructive noise.
