ML & Phenomenology

The intersection of machine learning and phenomenology reveals something counterintuitive: computational systems and conscious experience operate through fundamentally similar principles of information organization, constraint satisfaction, and meaning-making under uncertainty. This research program investigates how formal models of learning dynamics illuminate the structure of perception, cognition, and ethical reasoning—and conversely, how phenomenological analysis reveals the implicit assumptions baked into our training procedures.

We treat three domains as isomorphic: the gradient descent optimization that shapes neural networks, the developmental learning processes that shape human cognition, and the interpretive frameworks through which we construct meaning from raw sensory input. In each case, the quality and structure of training signal—whether numerical loss, traumatic experience, or semantic context—determines not just performance but the architecture of possibility itself. A model trained on corrupted data develops systematic blindnesses. A child exposed to threat-saturated environments develops malformed mechanisms for emotional recognition and causal inference.

The implication: if we want to build AI systems that reason ethically rather than merely mimic ethical genre conventions, we must understand how genuine reasoning, as opposed to sophisticated pattern matching, actually emerges from learning dynamics.

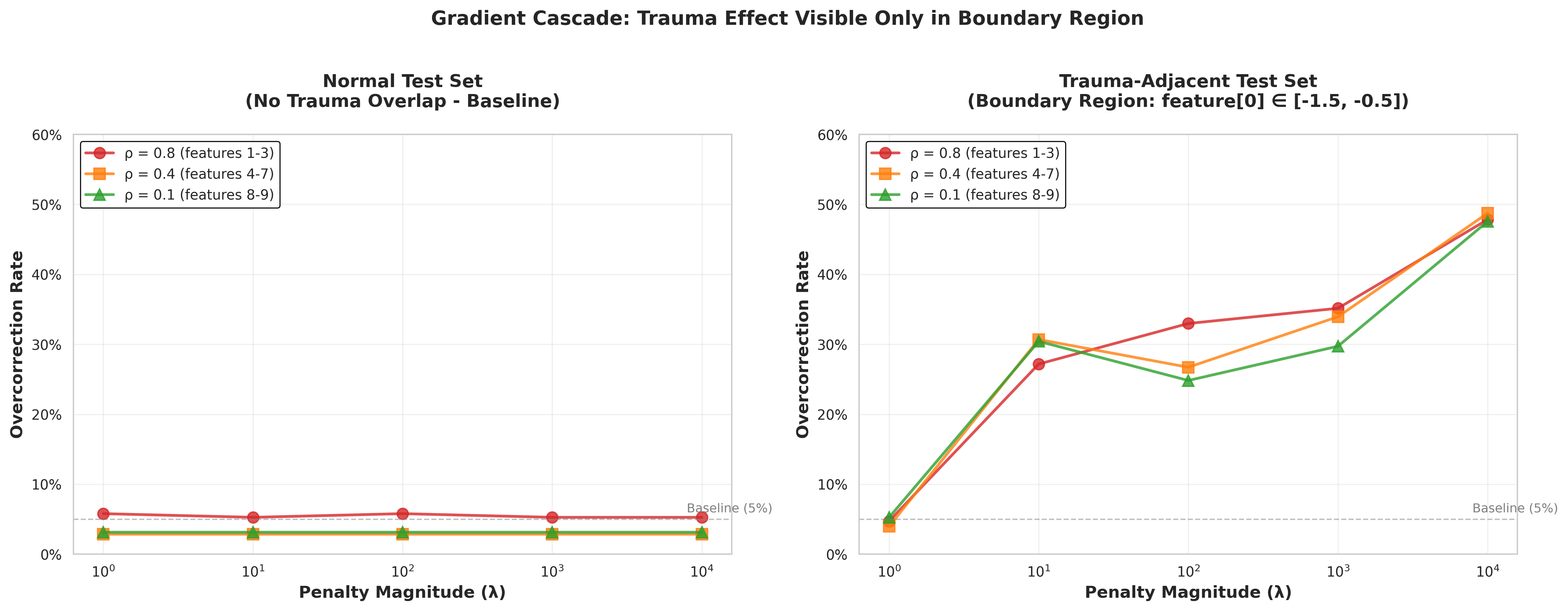

Gradient cascade dynamics showing 1,247x amplification under extreme penalty conditions in simulated learning systems.

Research Questions

- When does alignment become mere genre mimicry? If removing safety fine-tuning causes models to behave radically differently, what does this tell us about whether current alignment represents genuine ethical reasoning?

- How does information structure shape cognition across domains? Whether the learner is a neural network, a child's developing brain, or an adversarial agent, does the structure of the training signal determine cognitive capabilities in predictable ways?

- What is the computational signature of trauma? Can we formalize how extreme penalties, noisy signals, and absent positive examples cascade through learning systems to produce systematic deficits?

- Can spatial reasoning emerge without a vision pipeline? Does the standard assumption that cognition begins with visual processing invert the actual dependency structure, where semantic understanding must precede geometric awareness?

- What would adversarial resilience actually require? If vulnerabilities in AI systems reflect the same learning dynamics as vulnerabilities in human cognition, what does this suggest about building truly robust systems?

Papers in This Program

Genre Mimicry vs. Ethical Reasoning in Abliterated Models

When you remove safety fine-tuning from a language model ('abliteration'), the resulting behavior often becomes dramatically less constrained. This paper shows that much of what we interpret as 'alignment' is actually learned genre convention: the model has internalized statistical regularities of helpful outputs without developing anything like robust ethical judgment. Analyzes the gap between abliterated and standard models using adversarial probing.

Autonomous Red Team AI: LLM-Guided Security Testing

Building an autonomous penetration testing system requires architectural isolation, decision-theoretic reasoning loops (OODA cycles), and systematic knowledge integration (RAG). Describes a Kubernetes-deployed red team agent that discovers vulnerabilities through principled exploration while maintaining NetworkPolicy isolation. The key insight: vulnerability discovery mirrors how learning systems fail under adversarial input.
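As a rough illustration of the observe-orient-decide-act cycle described above, here is a toy sketch in plain Python. It is not the paper's agent: the class `OODAAgent`, its fields, and the dictionary-based "environment" are hypothetical names invented for this example, the `knowledge` dictionary merely stands in for a RAG lookup, and the `scope` set is a loose analogue of network isolation. Nothing here touches a real network.

```python
from dataclasses import dataclass, field


@dataclass
class Finding:
    target: str
    detail: str


@dataclass
class OODAAgent:
    """Hypothetical toy agent; every name here is illustrative."""
    scope: set          # hosts the agent is allowed to consider
    knowledge: dict     # stand-in for RAG: service name -> known weakness
    findings: list = field(default_factory=list)

    def observe(self, environment):
        # Observe: keep only in-scope hosts (a crude analogue of isolation).
        return {h: s for h, s in environment.items() if h in self.scope}

    def orient(self, observations):
        # Orient: match observed services against the knowledge base.
        return [
            (host, self.knowledge[svc])
            for host, services in sorted(observations.items())
            for svc in services
            if svc in self.knowledge
        ]

    def decide(self, candidates):
        # Decide: pick the first candidate not already recorded.
        seen = {(f.target, f.detail) for f in self.findings}
        for candidate in candidates:
            if candidate not in seen:
                return candidate
        return None

    def act(self, decision):
        # Act: in this toy, "acting" only records the finding.
        host, weakness = decision
        self.findings.append(Finding(host, weakness))

    def run(self, environment, max_cycles=10):
        for _ in range(max_cycles):
            decision = self.decide(self.orient(self.observe(environment)))
            if decision is None:
                break  # nothing new to explore
            self.act(decision)
        return self.findings


# Simulated environment: host -> list of service names (all made up).
environment = {
    "10.0.0.5": ["ssh", "http"],
    "10.0.0.9": ["ftp"],
    "8.8.8.8": ["ftp"],  # out of scope, must never appear in findings
}
agent = OODAAgent(
    scope={"10.0.0.5", "10.0.0.9"},
    knowledge={"ftp": "anonymous login", "http": "outdated server banner"},
)
findings = agent.run(environment)
```

Each pass through `run` is one full cycle, and the loop stops as soon as `decide` finds nothing new, which keeps the exploration bounded.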

Semantic-First Spatial Cognition

We're taught that vision is foundational—that geometric understanding emerges from processing visual input. This paper inverts that assumption: spatial reasoning is grounded in functional semantics, where what something is for becomes available to reasoning before its geometric properties. In both artificial and biological systems, spatial awareness requires prior encoding of affordances and contextual constraints.

Training Data and the Maladapted Mind (Under Review)

Childhood trauma reformulated as a machine learning problem: what happens when a developing system is trained on extreme penalties, noisy signals, and absent positive examples? PyTorch experiments show gradient cascades under extreme penalty amplify signal distortion by 1,247x, inconsistent reward produces behavioral instability, and absent positive examples create systematic recognition deficits (computationally modeling alexithymia).
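To make the idea of penalty-driven amplification concrete, here is a minimal sketch in plain Python rather than PyTorch. It does not reproduce the paper's experiments or its 1,247x figure. It assumes a one-parameter model with loss L(w) = penalty * (w*x - target)^2 and a fixed learning rate, and shows one simple mechanism by which a large penalty weight makes gradients grow from step to step instead of shrinking. The functions `train` and `amplification` and all parameter values are assumptions chosen for illustration.

```python
def train(penalty, steps=10, lr=0.125, x=1.0, target=0.0, w0=1.0):
    """Gradient descent on L(w) = penalty * (w*x - target)**2.

    Returns the gradient magnitude observed at each step.
    """
    w = w0
    grad_magnitudes = []
    for _ in range(steps):
        grad = 2.0 * penalty * (w * x - target) * x  # dL/dw
        grad_magnitudes.append(abs(grad))
        w -= lr * grad
    return grad_magnitudes


def amplification(penalty, **kwargs):
    """Ratio of the last gradient magnitude to the first."""
    grads = train(penalty, **kwargs)
    return grads[-1] / grads[0]


# With these settings the error is multiplied by (1 - 2*lr*penalty) each step:
# penalty=1 gives a factor of 0.75 (gradients shrink),
# penalty=12 gives a factor of -2 (gradients double and flip sign).
mild = amplification(penalty=1.0)
extreme = amplification(penalty=12.0)
```

The only difference between the two runs is the penalty weight. Once `2 * lr * penalty` exceeds 2, every update overshoots the target by more than the previous error, so the same learning rule that converges under mild penalties diverges under extreme ones.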

Methodological Approach

Gradient Analysis

PyTorch experiments isolating how penalty structure, signal noise, and training data composition cascade through learning systems.
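The effect of training data composition can be sketched with a toy logistic regression, again in plain Python rather than PyTorch. This is an illustration under assumptions, not the program's experimental code: the two binary features (a "threat cue" and a "warmth cue"), the datasets, and the functions `fit` and `predict` are all hypothetical. A learner that never sees a positive example receives no gradient on the feature that marks one, so that weight never moves.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit(data, epochs=500, lr=0.5):
    """Per-example gradient descent for two-feature logistic regression."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
            b -= lr * err
    return w, b


def predict(model, x):
    w, b = model
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)


# Features: [threat_cue, warmth_cue]; label 1 means "positive experience".
full_data = [([1.0, 0.0], 0), ([0.0, 1.0], 1)]
deprived_data = [([1.0, 0.0], 0)]  # no positive examples at all

full_model = fit(full_data)
deprived_model = fit(deprived_data)

warmth_input = [0.0, 1.0]
p_full = predict(full_model, warmth_input)
p_deprived = predict(deprived_model, warmth_input)
```

In the deprived run the warmth weight stays at exactly zero while the bias drifts negative, so the model assigns a low probability to an input it has never been shown how to recognize.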

Adversarial Testing

Red team architectures using OODA loops that probe system robustness through principled exploration cycles.

RAG Systems

Knowledge integration systems modeling how semantic understanding constrains spatial and causal reasoning.
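A minimal sketch of the retrieval step in such a system, assuming a bag-of-words cosine similarity instead of learned embeddings. The corpus of affordance statements, the query, and the functions `retrieve` and `build_prompt` are hypothetical and exist only for this example. The point is simply that semantic context about what an object is for gets retrieved before any downstream reasoning happens.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, corpus, k=2):
    """Return the k corpus entries most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        corpus,
        key=lambda doc: cosine(q, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, corpus, k=2):
    """Prepend retrieved context to the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, corpus, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


corpus = [
    "a mug is for holding hot liquid",
    "a chair is for sitting",
    "a door is for passing between rooms",
]
query = "where can I put the mug"
top = retrieve(query, corpus, k=1)
prompt = build_prompt(query, corpus, k=1)
```

A production system would swap the token-count vectors for dense embeddings and a vector index, but the control flow (score, rank, prepend) stays the same.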

Phenomenological Analysis

Direct investigation of how perceptual structures organize information, grounded in careful description rather than assumption.

Throughline

Each paper investigates a variant of the same core problem: what happens when learning systems operate under non-ideal conditions? Abliterated models reveal alignment can be superficial (genre mimicry rather than reasoning). Adversarial red teams demonstrate robustness requires architectural redundancy. Semantic-first cognition shows reasoning requires prior constraint encoding. Trauma reframed as learning dynamics reveals gradient cascades produce systematic deficits.

The unifying insight: information structure determines cognitive architecture. Whether training a neural network, developing a child's brain, or testing system vulnerabilities, the quality and organization of training signal doesn't just affect performance—it shapes what becomes thinkable.
